Handling touchscreen or mouse events: Rotate Touch Handler

With the Mosaic class Core::RotateTouchHandler you can react to touchscreen or mouse device events. This so-called Rotate Touch Handler object can be used within your GUI component to add functionality that is executed when the user touches the screen with a finger inside the boundary area of the Touch Handler and performs a gesture. If a mouse device is available, the gesture can also be performed by using the mouse.

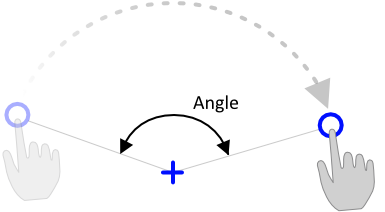

The Rotate Touch Handler is optimized to detect one finger rotation gestures. When the user touches the screen and drags the finger, the Touch Handler calculates from the movement the rotation angle around the center of its area and sends a signal to one of the slot methods associated to the Handler. Within the slot method your particular implementation is executed and the calculated angle can be evaluated. As such, this Handler is ideal to implement any kinds of wheel and rotary knob widgets. The following figure demonstrates the principle idea of a rotation gesture:

Please note that the Mosaic framework additionally provides the Wipe Touch Handler and the Slide Touch Handler, which implement other important gesture processors. Moreover, with the Simple Touch Handler you can process raw touch events or simple taps.

The following sections are intended to give you an introduction and useful tips on how to work with the Rotate Touch Handler object and how to process touchscreen and mouse events. For the complete reference, please see the documentation of the Core::RotateTouchHandler class.

Add new Rotate Touch Handler object

To add a new Rotate Touch Handler object at the design time of a GUI component, do the following:

★First ensure that the Templates window is visible.

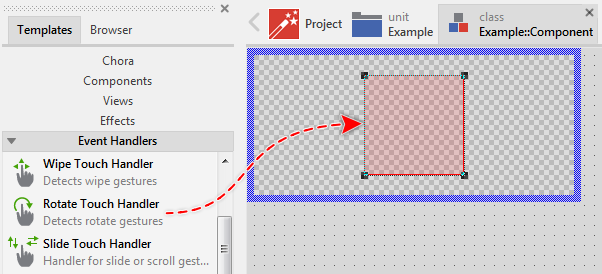

★In Templates window switch to the folder Event Handlers.

★In the folder locate the template Rotate Touch Handler.

★Drag & Drop the template into the canvas area of the Composer window:

★Optionally, name the newly added Handler.

IMPORTANT

Although it is an event handler object and not a real view, the Touch Handler appears within the canvas area as a slightly red-tinted rectangle. This effect exists just for your convenience and lets you recognize the Touch Handler while assembling the GUI component. In the Prototyper as well as on the target device, the Handler itself is never visible.

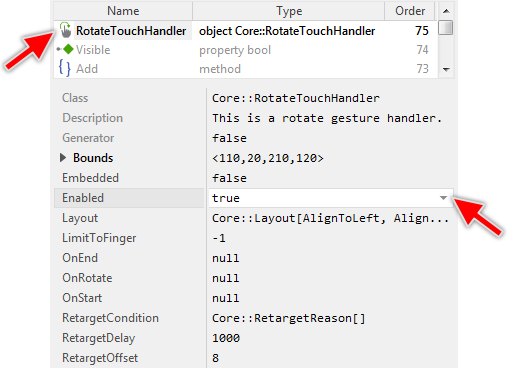

Inspect the Rotate Touch Handler object

As long as the Touch Handler object is selected you can inspect and modify its properties conveniently in the Inspector window as demonstrated with the property Enabled in the screenshot below:

This is worth mentioning because all following sections describe various features of the Touch Handler object by explicitly referring to its corresponding properties. If you are not familiar with the concept of a property and the usage of the Inspector window, please first read the preceding chapter Compositing component appearance.

Arrange the Rotate Touch Handler object

Once added, you can freely move the Touch Handler or simply grab one of its corners to resize it. You can also control the position and the size of the Handler by directly modifying its property Bounds. If you want the Touch Handler to be arranged behind other views, you can reorder it explicitly.

Although Touch Handlers aren't visible in the resulting application, their areas determine where the application actually reacts to touch interactions. The Handler reacts to an interaction only when the user touches inside the Handler's boundary area. Moreover, if two Touch Handlers overlap, the touch events are by default processed only by the topmost Handler, which suppresses the Handler in the background. The arrangement of the Touch Handlers within your application is therefore an important aspect of its correct function.

Implement Handler slot methods

While the user touches inside the area of a Rotate Touch Handler, the Handler receives corresponding touchscreen events, which are then passed through its own gesture detector algorithm. As soon as the detector recognizes a rotation gesture, the Handler sends a signal to one of the slot methods connected with it. Within the slot method, your particular implementation can react to and process the event. The slot methods are connected to the Handler by simply storing them in the Handler's properties provided for this purpose. The following table describes them:

| Property | Description |
|---|---|
| OnStart | The slot method associated with this property receives a signal just at the beginning of an interaction, in other words immediately after the user has touched the screen. |
| OnEnd | The slot method associated with this property receives a signal just at the end of an interaction, in other words immediately after the user has released the touchscreen again. |
| OnRotate | The slot method associated with this property receives signals every time the user drags the finger and the Handler recognizes a rotation gesture from the movement. This is true even if the finger has left the boundary area of the Touch Handler. In practice, as long as the user has not finalized the interaction, the OnRotate slot method will be signaled with every finger movement. |

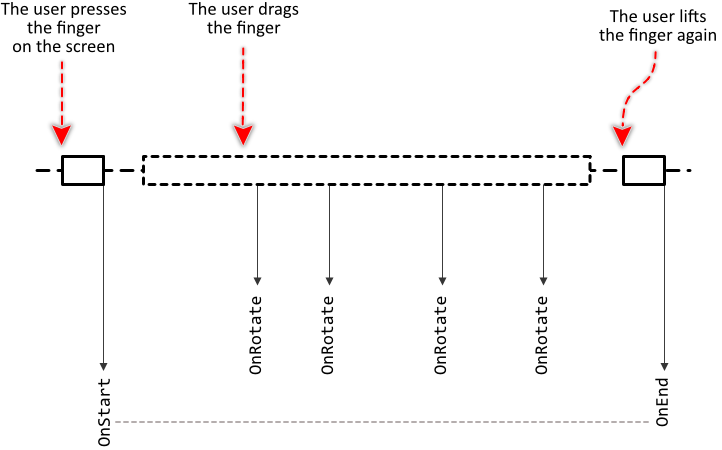

The following sequence diagram demonstrates a typical order in which the slot methods receive signals while the user interacts with the Touch Handler. Please note, that every interaction starts with a signal sent to the OnStart and ends with a signal sent to the OnEnd slot method. In the meantime the OnRotate slot method is signaled every time the user drags the finger:

Providing slot methods for all properties is not obligatory. If in your application case you want to process only the events generated by recognized rotation gestures, leave all properties initialized with null except the property OnRotate. You can initialize the properties with already existing slot methods, or you can add a new one and implement it as desired. The following steps describe how to do this:

★First add a new slot method to your GUI component.

★To react to the start interaction event: assign the slot method to the property OnStart of the Handler object.

★To react to the end interaction event: assign the slot method to the property OnEnd of the Handler object.

★To react to the rotation gesture event: assign the slot method to the property OnRotate of the Handler object.

★Open the slot method for editing.

★In the Code Editor implement your desired operation to execute when the gesture is detected or the corresponding event occurs.
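As a minimal sketch of such an implementation, the following Chora code could form the body of a slot method assigned to the property OnRotate. It assumes that the Handler object is named TouchHandler, and it merely reports the current angle with the trace statement. Both the name and the chosen reaction are illustrative assumptions:

```chora
// Body of a slot method assigned to the property OnRotate of the Handler.
// 'TouchHandler' is the assumed name of the Rotate Touch Handler object.
// The statement only prints the angle, e.g. to the Log window during
// prototyping. Replace it with your own operation.
trace "Current rotation angle in degrees:", TouchHandler.Angle;
```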

The Rotate Touch Handler object manages several variables reflecting its current state. You can evaluate these variables whenever your GUI component implementation requires the corresponding information. Usually, however, you do this from the slot methods described above. The following table provides an overview of the available variables:

| Variable | Description |
|---|---|
| Down | The variable is true if the Handler has recently received the press touch event without the corresponding release event. In other words, the user still holds the finger on the screen. |
| Inside | The variable is true if the finger currently lies inside the boundary area of the Touch Handler or the user has finalized the interaction with the finger lying inside the area. |
| Angle | The variable stores the angle corresponding to the position where the user recently touched the screen. The angle is expressed in degrees counted counter-clockwise. Accordingly, touching in the middle of the Handler's right edge results in an angle of 0 (zero) degrees. In turn, touching in the top-left corner of the Handler's area corresponds to an angle of 135 degrees. |
| Relative | The variable stores the change of the angle relative to its value at the beginning of the interaction. The angle is expressed in degrees counted counter-clockwise. |
| Delta | The variable stores the change of the angle relative to the preceding OnRotate event. This is useful if you need to incrementally track the finger movements. The angle is expressed in degrees counted counter-clockwise. |
| HittingPos | The variable stores the position of the finger just at the beginning of the interaction. The position is expressed in coordinates relative to the top-left corner of the GUI component containing the Touch Handler. |
| AutoDeflected | The variable is true if the current interaction has been taken over by another Touch Handler. See below Combine several Touch Handlers together. |

Whether and which variables your implementation evaluates depends on your particular application case. The variable Down is useful if you have implemented a single slot method for both the OnStart and OnEnd events. In such a case you can easily distinguish whether the method is called because the user has begun or finalized the gesture. With the variables Angle, Relative and Delta you can calculate and track the angle changes. By evaluating the variable Inside you can determine whether the finger lies inside the area of the Touch Handler while the user performs the gesture. The following Chora code demonstrates a few examples of how the various variables are used:

```chora
if ( !TouchHandler.Down )
{
  // The user has finalized the interaction.
}

if ( !TouchHandler.Down && TouchHandler.Inside )
{
  // The user has finalized the interaction while the
  // finger was inside the Touch Handler boundary area.
}

if ( TouchHandler.Relative >= 45 )
{
  // The user has performed a rotate left gesture with an angle
  // of at least 45 degree
}

if (( TouchHandler.Relative >= 45 ) && TouchHandler.Inside )
{
  // The user has performed a rotate left gesture with an angle
  // of at least 45 degree without leaving the boundary area of
  // the Touch Handler
}

if (( TouchHandler.Relative >= 45 ) && TouchHandler.Inside && !TouchHandler.Down )
{
  // The user has finalized the interaction after performing a
  // rotate left gesture with an angle of at least 45 degree
  // and without leaving the boundary area of the Touch Handler
}

var point destPos = ...

// Within the OnRotate slot method let a WarpImage view rotate its
// content accordingly to the angle resulting from the current touch
// position
WarpImageView.RotateAndScale( destPos, (float)TouchHandler.Angle, 1.0, 1.0 );
```
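The variable Delta lends itself to incremental tracking. As a hedged sketch, the following code could be the body of an OnRotate slot method in a rotary knob component. The variable KnobAngle and its limits of -135 and 135 degrees are hypothetical and not part of the Mosaic framework. The sketch also assumes that Delta is an integer number of degrees:

```chora
// Body of a slot method assigned to the property OnRotate.
// 'KnobAngle' is an assumed int32 variable of the GUI component. It
// accumulates the rotation of the knob in degrees.
KnobAngle = KnobAngle + TouchHandler.Delta;

// Limit the knob to an assumed range of -135 .. +135 degrees
if ( KnobAngle < -135 )
  KnobAngle = -135;

if ( KnobAngle > 135 )
  KnobAngle = 135;
```

Because Delta reports only the change since the preceding OnRotate event, the accumulated value is carried over from one interaction to the next, which would not be the case when using Angle or Relative directly.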

The following example demonstrates the usage of the Rotate Touch Handler to implement a rotary knob component. In this example, when the user touches the rotary knob and drags the finger, the rotary knob is rotated accordingly.

Please note that the example may use features available as of version 8.10.

Configure the filter condition

Every time the user touches the screen, the Mosaic framework searches at the affected screen position for a Touch Handler which is enabled and configured to accept the new interaction. More precisely, if the device supports multi-touch, you can restrict a Handler to respond only to interactions associated with a particular finger number.

This setting is controlled by the property LimitToFinger. The fingers are counted starting with 0 (zero) for the first finger touching the screen up to 9 for the tenth finger when the user uses all fingers simultaneously. By default, this property is initialized with -1, which instructs the Handler to accept any interaction regardless of how many fingers the user is currently touching the screen with.

Initializing LimitToFinger with the value 0 (zero) restricts the Handler to accept only the interaction associated with the first finger. In other words, the Handler will ignore any further interaction if the user is already touching the screen with at least one finger. If this property is initialized with the value 4, the Handler will be activated only when the user touches the screen with the fifth finger.
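The property is usually configured in the Inspector window. Expressed as a Chora assignment, for instance within an initialization method of the GUI component, the restriction to the first finger could look as sketched below. The object name TouchHandler is an assumption:

```chora
// 'TouchHandler' is the assumed name of the Rotate Touch Handler object.
// Accept only the interaction associated with the first finger (number 0).
// Assigning -1 again would restore the default behavior of accepting
// any finger.
TouchHandler.LimitToFinger = 0;
```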

The numbering of fingers is an aspect you have to take care of in the main software, where the GUI application and your particular touchscreen driver are integrated together. Specifically, when you feed touchscreen events into the application by calling the method DriveMultiTouchHitting, you should pass the number of the associated finger in its aFinger argument. This requires, however, that the touchscreen driver in your device provides information to uniquely identify all fingers currently involved in touchscreen interactions.

Touch events and the grab cycle

When the user touches the screen, the corresponding press event is dispatched to the Touch Handler enclosing the affected screen position. This starts the so-called grab cycle. The grab cycle means that the Touch Handler becomes the direct receiver of all related subsequent touchscreen events. In other words, the Touch Handler temporarily grabs the touchscreen. However, if your device supports multi-touch, the grab cycle is limited to events associated with the finger which originally initiated the touch interaction. The grab cycle doesn't affect the events triggered by other fingers.

With the grab cycle the Mosaic framework ensures that a Touch Handler which has received a press event also processes the corresponding release event. Disabling the Touch Handler, hiding the superior component or removing its parent GUI component from the screen while the Handler is involved in the grab cycle does not interrupt the event delivery. Similarly, when the user drags the finger outside the boundary area of the Touch Handler, the grab cycle persists and the Touch Handler will continue receiving all events associated with the finger.

The grab cycle ends automatically when the user releases the corresponding finger. With the end of the grab cycle the involved Handler will receive the final release event. The grab cycle technique is thus convenient if it is essential in the implementation of your GUI component to always correctly finalize the touchscreen interaction.

Under normal circumstances you don't need to think about the mechanisms behind the grab cycle. You should, however, understand that after sending a signal to the slot method stored in its OnStart property, the Rotate Touch Handler will always send a signal to the slot method stored in its OnEnd property as soon as the user finalizes the interaction.

Combine several Touch Handlers together

According to the concept of the grab cycle described above, the touchscreen events are delivered to the Handler which originally responded to the interaction. This relation persists until the user lifts the finger from the touchscreen surface again and thus finalizes the interaction. In other words, events generated by a finger are processed by one Touch Handler only.

This is worth mentioning because arranging several Touch Handlers one above the other with the intention of processing a different gesture with every Handler will not work by default. Only the topmost Handler will react and process the events. This Touch Handler, however, after having detected that it is not responsible for the gesture, can reject the active interaction, causing another Touch Handler to take it over. In this manner, several Touch Handlers can seamlessly cooperate.

With the property RetargetCondition you can select one or several basic gestures which the affected Touch Handler should not process. As soon as the Touch Handler detects that the user tries to perform one of the specified gestures, the next available Handler lying in the background and willing to process the gesture will automatically take over the interaction. The following table provides an overview of the basic gestures you can activate in the RetargetCondition property and of the additional properties to configure the gestures in more detail:

| Gesture | Description |
|---|---|
| WipeDown | Vertical wipe down gesture. Per default the gesture is detected after the user has dragged the finger for at least 8 pixels. With the property RetargetOffset the minimal distance can be adjusted as desired. |
| WipeUp | Vertical wipe up gesture. Per default the gesture is detected after the user has dragged the finger for at least 8 pixels. With the property RetargetOffset the minimal distance can be adjusted as desired. |
| WipeLeft | Horizontal wipe left gesture. Per default the gesture is detected after the user has dragged the finger for at least 8 pixels. With the property RetargetOffset the minimal distance can be adjusted as desired. |
| WipeRight | Horizontal wipe right gesture. Per default the gesture is detected after the user has dragged the finger for at least 8 pixels. With the property RetargetOffset the minimal distance can be adjusted as desired. |
| LongPress | This gesture is detected when the user holds the finger pressed for a period longer than the value specified in the property RetargetDelay. This is per default 1000 milliseconds. |
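As a rough sketch, the three properties mentioned in the table could be configured with the following Chora assignments. The object name TouchHandler as well as the values 16 and 500 are assumptions chosen only for illustration. Usually you would configure the properties in the Inspector window instead:

```chora
// 'TouchHandler' is the assumed name of the Rotate Touch Handler object.
// Let the Handler give up the interaction when the user performs a
// vertical wipe gesture or holds the finger pressed for a longer time.
TouchHandler.RetargetCondition = Core::RetargetReason[ WipeDown, WipeUp, LongPress ];

// Illustrative values: detect the wipe gestures after 16 pixels instead of
// the default 8 and the long press after 500 ms instead of the default 1000.
TouchHandler.RetargetOffset = 16;
TouchHandler.RetargetDelay  = 500;
```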

Let's assume you want to implement a GUI component which displays, within a Text view, text pages that the user can scroll vertically. With wipe left and wipe right gestures the user should be able to browse between the various text pages. Moreover, a double tap within the page area should automatically scroll the text to its beginning.

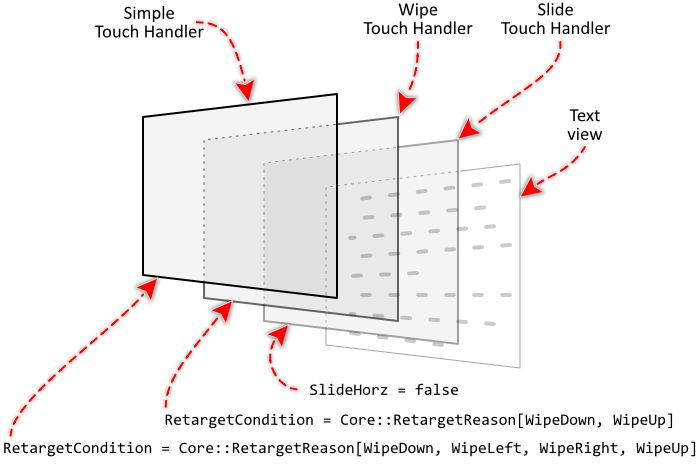

This example can be implemented by combining three Touch Handlers: the Slide Touch Handler to scroll the text vertically, the Wipe Touch Handler to detect the wipe left and wipe right gestures, and the regular Simple Touch Handler to detect the double taps. The following steps configure these Handlers:

★Add a new Text view to your component.

★Add a new Slide Touch Handler to your component.

★Arrange the Slide Touch Handler so it covers the area of the Text view.

★Add a new Wipe Touch Handler to your component.

★Arrange the Wipe Touch Handler so it covers the area of the Text view.

★Add a new Simple Touch Handler to your component.

★Arrange the Simple Touch Handler so it covers the area of the Text view.

★For the Simple Touch Handler configure the property RetargetCondition with the value Core::RetargetReason[WipeDown, WipeLeft, WipeRight, WipeUp].

★For the Wipe Touch Handler configure the property RetargetCondition with the value Core::RetargetReason[WipeDown, WipeUp].

★For the Slide Touch Handler configure the property SlideHorz with the value false.

The following figure demonstrates the arrangement and the configuration of the three Touch Handlers:

When the user touches the GUI component, the events are initially processed by the Simple Touch Handler. Later, when the user drags the finger to the left, the Simple Touch Handler detects the wipe gesture and retargets the interaction to the Wipe Touch Handler. In turn, if the user has dragged the finger up, the Slide Touch Handler will take over the interaction, since the Wipe Touch Handler is configured to also reject all wipe up and wipe down gestures.

The following example demonstrates this application case:

Please note that the example may use features available as of version 8.10.

This so-called deflection mechanism is not limited to Touch Handlers within the same GUI component. When the Touch Handler decides to reject the current interaction, all Touch Handlers existing at that moment in the application and lying at the current touch position are queried in order to find the best one to take over the interaction. The old and the new Touch Handler can thus belong to different GUI components.

Please note that rejecting the active interaction finalizes the corresponding grab cycle for the old Touch Handler and initiates a new one for the new Touch Handler. Accordingly, the old Handler receives the final release event as if the user had lifted the finger from the screen, and the new Handler receives the initial press event as if the user had just touched it. Both Handlers act independently.

If you implement an OnEnd slot method for a Rotate Touch Handler which may reject the interaction, you can evaluate its variable AutoDeflected. This variable is set to true if the event is generated because the interaction has been taken over by another Handler. Depending on your application case, you may then decide not to process the event. For example, a rotary switch should be activated only if the user really lifts the finger. In turn, when the interaction is taken over by another Handler, the rotary switch should restore its original visual aspect but it should not fire. The following code could be the implementation of the OnEnd slot method within a rotary switch component:

```chora
// Request the rotary knob component to restore its normal (not rotated) visual aspect
InvalidateViewState();

// The event is generated because the interaction has been taken over by another
// Handler. Ignore the event.
if ( TouchHandler.AutoDeflected )
  return;

// Process the event. For example, execute some code depending on the rotation
// direction
if ( TouchHandler.Relative > 30 )
{
  // The user has rotated the switch to the left
}

if ( TouchHandler.Relative < -30 )
{
  // The user has rotated the switch to the right
}
```

Rotate Touch Handler and multi-touch screen

The Rotate Touch Handler responds to the first touch interaction matching the specified condition. Afterwards, touching inside the Handler's area with a further finger is ignored. If there is another Handler lying in the background, it may get the chance to respond and process the event. In other words, the Rotate Touch Handler is restricted to processing events associated with only one finger at a time.

Accordingly, the simplest way to handle multi-touch interactions is to manage several Touch Handlers within the application. For example, in an application containing many rotary knob widgets, the user can rotate the knobs simultaneously, much as is possible with a control panel in a hi-fi system. Every rotary knob with its embedded Touch Handler will then individually process the touch events associated with the corresponding finger and detect the rotate gestures. From the user's point of view, the knobs can be controlled independently of each other.

Support for mouse input devices

Applications developed with Embedded Wizard are not restricted to being controlled by tapping on a touchscreen only. With the Rotate Touch Handler you can also process events triggered by a regular mouse input device. Accordingly, when the user clicks with the mouse inside the boundary area of a Touch Handler and drags the mouse, the Handler will recognize a rotation gesture. Thus you can use Embedded Wizard to develop desktop applications able to run under Microsoft Windows, Apple macOS or diverse Unix distributions.

There are no essential differences when handling mouse or touchscreen events except the multi-touch functionality, which is not available when using the mouse device. Even mouse devices with more than one button can be handled. By default, the left mouse button is associated with the first finger (the finger with the number 0 (zero)). The right mouse button corresponds to the second finger and the middle button to the third finger. Knowing this, you can easily configure the Touch Handler to respond to the mouse events of interest.
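For instance, a Handler intended to react only to the right mouse button could be restricted to the second finger by using the property LimitToFinger. The following line is a sketch assuming the default button mapping described above and a Handler object named TouchHandler:

```chora
// 'TouchHandler' is the assumed name of the Rotate Touch Handler object.
// With the default mapping, the right mouse button corresponds to the
// second finger, which has the number 1.
TouchHandler.LimitToFinger = 1;
```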

In principle, in the main software, where the GUI application and the particular mouse driver or OS API are integrated together, you are free to map the received mouse events to the corresponding invocations of Embedded Wizard methods. Specifically, you feed the events into the application by calling the methods DriveCursorHitting and DriveCursorMovement.

Rotate Touch Handler and invisible components

Please note that the function of a Touch Handler is not affected by the visibility status of the GUI component in which the Handler is used. Embedded Wizard differentiates explicitly between the status ready to be shown on the screen and the status able to handle user inputs. As long as the Handler lies within a potentially visible screen area, hiding the component will not prevent the Touch Handler from reacting to user inputs. Thus, if you want a GUI component to be hidden and unable to handle user inputs, you have to both hide and disable the component.

Generally, whether a GUI component is visible or not is determined by its property Visible. If the component is embedded inside another component, the resulting visibility status depends also on the property Visible of every superior component. In other words, a component is visible only if its property Visible and the property of every superior component are true. If one of these properties is false the component is considered as hidden.

In turn, the ability to react to user inputs is controlled by the component's property Enabled. Again, if the component is embedded inside another component, the resulting enabled status depends also on the property Enabled of every superior component. In other words, a component is able to handle user inputs only if its property Enabled and the property of every superior component are true. If one of these properties is false the component is considered as disabled.

IMPORTANT

A hidden component will receive and handle all user inputs as if it were visible. If this is not desired, you have to set both properties Visible and Enabled of the affected component to the value false.
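In Chora this could be sketched as follows. The name Panel stands for a hypothetical GUI component embedded in your own component and containing the Touch Handler:

```chora
// 'Panel' is an assumed name of an embedded GUI component.
// Hide the component ...
Panel.Visible = false;

// ... and additionally prevent it, together with the Touch Handlers it
// contains, from reacting to user inputs.
Panel.Enabled = false;
```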

Disable a Rotate Touch Handler

By initializing the property Enabled with the value false you can explicitly suppress a Touch Handler from being able to react to touchscreen inputs. In this manner, other Handlers lying behind it are exposed and can respond to the events.

Please note that modifying Enabled while the Handler is currently involved in a grab cycle has no immediate effect. In other words, as long as the user does not finalize the previously initiated touchscreen interaction, the Handler will continue receiving events even if it has been disabled in the meantime. After all, the grab cycle guarantees that a Handler which has received the press event will also receive the corresponding release event.

TIP

In the GUI application, an individual Touch Handler can handle events only when the Handler and all of its superior Owner components are enabled, the Handler itself lies within the visible area of the superior component, and the Handler is not covered by any other Touch Handler.

Please note that Touch Handlers continue working even if you hide the superior components. See: Rotate Touch Handler and invisible components.